ChatGPT is the latest language model from OpenAI and represents a significant improvement over its predecessor GPT-3. Similarly to many Large Language Models, ChatGPT is capable of generating text in a wide range of styles and for different purposes, but with remarkably greater precision, detail, and coherence. It represents the next generation in OpenAI's line of Large Language Models, and it is designed with a strong focus on interactive conversations.

The creators have used a combination of both Supervised Learning and Reinforcement Learning to fine-tune ChatGPT, but it is the Reinforcement Learning component specifically that makes ChatGPT unique. The creators use a particular technique called Reinforcement Learning from Human Feedback (RLHF), which uses human feedback in the training loop to minimize harmful, untruthful, and/or biased outputs.

We are going to examine GPT-3's limitations and how they stem from its training process, before learning how RLHF works and understanding how ChatGPT uses RLHF to overcome these issues. We will conclude by looking at some of the limitations of this methodology.

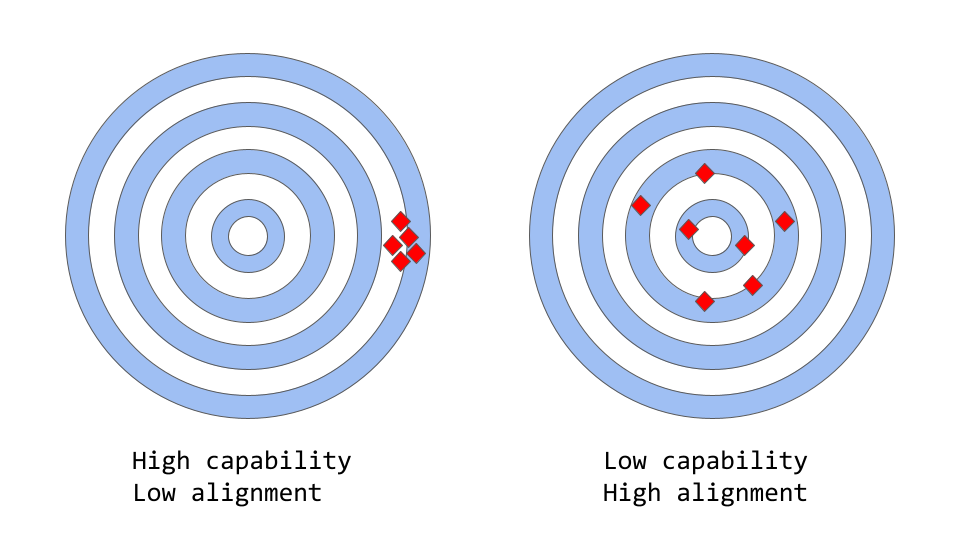

Capability vs Alignment in Large Language Models

In the context of machine learning, the term capability refers to a model's ability to perform a specific task or set of tasks. A model's capability is typically evaluated by how well it is able to optimize its objective function, the mathematical expression that defines the goal of the model. For example, a model designed to predict stock market prices might have an objective function that measures the accuracy of the model's predictions. If the model is able to accurately predict the movement of stock prices over time, it would be considered to have a high level of capability for this task.

Alignment, on the other hand, is concerned with what we actually want the model to do versus what it is being trained to do. It asks the question “is that objective function consistent with our intentions?” and refers to the extent to which a model's goals and behavior align with human values and expectations. For a simple concrete example, say we train a bird classifier to classify birds as either "sparrows" or "robins" and we use log loss (which measures the difference between the predicted probability distribution of the model and the true distribution) as the training objective, even though our ultimate goal is a high classification accuracy. The model might have low log loss, i.e. the model’s capability is high, but poor accuracy on the test set. In fact, the log loss is not perfectly correlated with accuracy in classification tasks. This is an example of misalignment, where the model is capable of optimizing the training objective but poorly aligned with our ultimate goal.
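The gap between log loss and accuracy can be seen on a toy example. The following is a minimal sketch with made-up labels and predicted probabilities (none of these numbers come from a real classifier); `log_loss` and `accuracy` are small helper functions written for this illustration.

```python
import math

def log_loss(y_true, p_pred):
    """Mean binary cross-entropy; p_pred is the predicted probability of class 1."""
    return -sum(
        math.log(p) if y == 1 else math.log(1.0 - p)
        for y, p in zip(y_true, p_pred)
    ) / len(y_true)

def accuracy(y_true, p_pred, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    return sum((p >= threshold) == (y == 1) for y, p in zip(y_true, p_pred)) / len(y_true)

# Hypothetical labels: 1 = "robin", 0 = "sparrow".
labels = [1, 1, 1, 0, 0, 0]

# Model A is barely confident but always on the right side of 0.5.
model_a = [0.51, 0.51, 0.51, 0.49, 0.49, 0.49]
# Model B is very confident on four examples and mildly wrong on two.
model_b = [0.99, 0.99, 0.45, 0.01, 0.01, 0.55]

for name, probs in [("A", model_a), ("B", model_b)]:
    print(name, round(log_loss(labels, probs), 3), round(accuracy(labels, probs), 3))
```

In this toy setup Model B reaches the lower log loss while Model A reaches the higher accuracy, so optimizing one does not guarantee the other.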

Models like the original GPT-3 are misaligned

Large Language Models, such as GPT-3, are trained on vast amounts of text data from the internet and are capable of generating human-like text, but they may not always produce output that is consistent with human expectations or desirable values. In fact, their training objective is to learn a probability distribution over word sequences (or token sequences) that allows them to predict the next word in a sequence (more details on this below).

In practical applications, however, these models are intended to perform some form of valuable cognitive work, and there is a clear divergence between the way these models are trained and the way we would like to use them. Even though a machine calculated statistical distribution of word sequences might be, mathematically speaking, a very effective choice to model language, we as humans generate language by choosing text sequences that are best for the given situation, using our background knowledge and common sense to guide this process. This can be a problem when language models are used in applications that require a high degree of trust or reliability, such as dialogue systems or intelligent personal assistants.

While these powerful, complex models trained on huge amounts of data have become extremely capable in the last few years, when used in production systems to make human lives easier they often fall short of this potential. The alignment problem in Large Language Models typically manifests as:

- Lack of helpfulness: not following the user's explicit instructions.

- Hallucinations: the model making up nonexistent or wrong facts.

- Lack of interpretability: it is difficult for humans to understand how the model arrived at a particular decision or prediction.

- Generating biased or toxic output: a language model that is trained on biased/toxic data may reproduce that in its output, even if it was not explicitly instructed to do so.

But where does this alignment problem stem from, concretely? Is the very way language models are trained inherently prone to misalignment?

How language model training strategies can produce misalignment

Next-token-prediction and masked-language-modeling are the core techniques used for training language models, such as transformers. In the first approach, the model is given a sequence of words (or “tokens”, i.e. parts of words) as input and is asked to predict the next word in the sequence. For example, if the model is given the input sentence

"The cat sat on the"

it might predict the next word as "mat", "chair", or "floor", because of the high-probability of occurrence of these words given the previous context; the language model is in fact able to estimate the likelihood of each possible word (in its vocabulary) given the previous sequence.
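To make the idea of "estimating the likelihood of each possible word" concrete, here is a minimal sketch. The vocabulary and the raw scores (logits) are invented for illustration; a real model would produce one score per token in a vocabulary of tens of thousands of entries.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores a model might assign after "The cat sat on the".
logits = {"mat": 3.2, "chair": 2.5, "floor": 2.4, "moon": -1.0, "because": -3.0}

probs = softmax(logits)
ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
for token, p in ranked:
    print(f"{token:>8}: {p:.3f}")
```

Generation then amounts to picking (or sampling) a token from this distribution, appending it to the input, and repeating.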

The masked language modeling approach is a variant of next token prediction, in which some of the words in the input sentence are replaced with a special token, such as [MASK]. The model is then asked to predict the correct word that should be inserted in place of the mask. For example, if the model is given the sentence

"The [MASK] sat on the"

as input, it might predict the masked word as "cat", "dog", or "rabbit".

One advantage of these objective functions is that they allow the model to learn the statistical structure of language, such as common word sequences and patterns of word usage. This generally helps the model generate more natural and fluent text, and it is an essential step in the pre-training phase of every language model.

However, these objective functions can also lead to problems, essentially because the model is not capable of distinguishing between an important error and an unimportant one. To make a very simple example, if the model is given the input sentence:

"The Roman Empire [MASK] with the reign of Augustus."

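it might predict "began" or "ended", as both words score a high likelihood of occurrence (indeed, both sentences can be read as historically correct: the empire began under Augustus, its first emperor, and the Western empire ended under Romulus Augustus, its last), even though the second choice implies a very different meaning.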
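The point that the training loss cannot tell an important error from an unimportant one can be sketched in a few lines. This example assumes, purely for illustration, that the training text reads "began" and that the model's vocabulary has only three candidate words; the probabilities are made up.

```python
import math

def cross_entropy(probs, target):
    """Loss for one masked position: negative log-probability of the true token."""
    return -math.log(probs[target])

target = "began"

# Two hypothetical predictions that give the true token the same probability.
# One puts the remaining mass on a near-synonym, the other on an antonym.
harmless_miss = {"began": 0.4, "started": 0.6, "ended": 0.0}
serious_miss = {"began": 0.4, "started": 0.0, "ended": 0.6}

loss_harmless = cross_entropy(harmless_miss, target)
loss_serious = cross_entropy(serious_miss, target)
print(loss_harmless, loss_serious)
```

Both predictions receive exactly the same loss, because the objective only looks at the probability assigned to the true token, not at what the competing words would mean.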

More generally, these training strategies can lead to a misalignment of the language model for some more complex tasks, because a model that is only trained to predict the next word (or a masked word) in a text sequence may not necessarily be learning higher-level representations of its meaning. As a result, the model struggles to generalize to tasks or contexts that require a deeper understanding of language.

Researchers and developers are working on various approaches to address the alignment problem in Large Language Models. ChatGPT is based on the original GPT-3 model, but has been further trained by using human feedback to guide the learning process with the specific goal of mitigating the model's misalignment issues. The specific technique used, called Reinforcement Learning from Human Feedback, is based on previous academic research. ChatGPT is among the first models put into production to use this technique.

But how exactly do the creators of ChatGPT use human feedback to attack the alignment problem?

Reinforcement Learning from Human Feedback

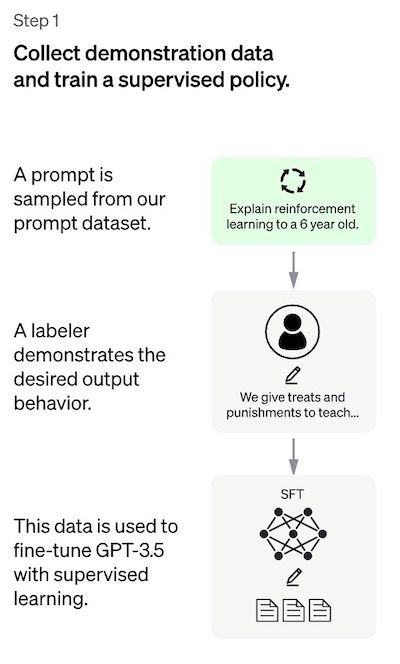

The method overall consists of three distinct steps:

- Supervised fine-tuning – A pre-trained language model is fine-tuned on a relatively small amount of demonstration data curated by labelers, to learn a supervised policy (the SFT model) that generates outputs from a selected list of prompts. This represents the baseline model.

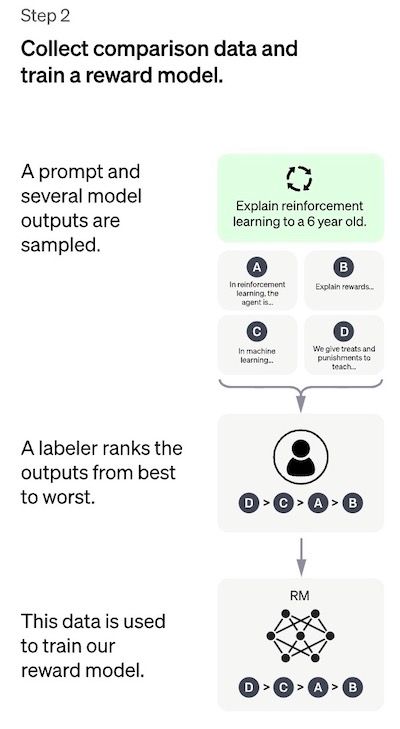

- “Mimic human preferences” – Labelers are asked to vote on a relatively large number of the SFT model outputs, this way creating a new dataset consisting of comparison data. A new model is trained on this dataset. This is referred to as the reward model (RM).

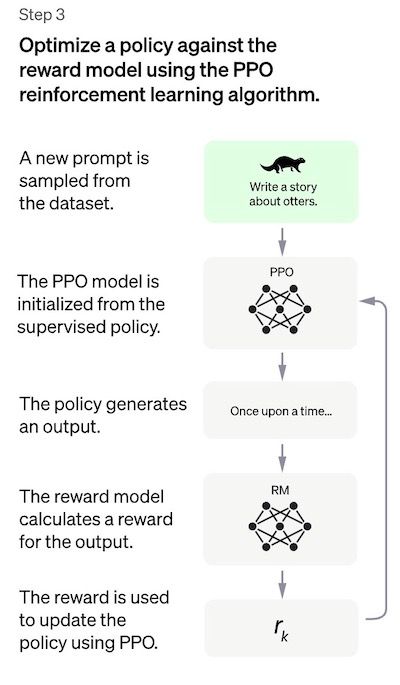

- Proximal Policy Optimization (PPO) – The reward model is used to further fine-tune and improve the SFT model. The outcome of this step is the so-called policy model.

Step 1 takes place only once, while steps 2 and 3 can be iterated continuously: more comparison data is collected on the current best policy model, which is used to train a new reward model and then a new policy.

Let’s now dive into the details of each step!

Note: The rest of this article is based on the content of the InstructGPT paper. According to OpenAI, ChatGPT has been trained “using the same methods as InstructGPT, but with slight differences in the data collection setup” (source). Unfortunately, exact quantitative reports have yet to be made publicly available for ChatGPT.

Step 1: The Supervised Fine-Tuning (SFT) model

The first step consists in collecting demonstration data in order to train a supervised policy model, referred to as the SFT model.

- Data collection: a list of prompts is selected and a group of human labelers is asked to write down the expected output response. For ChatGPT, two different sources of prompts have been used: some have been prepared directly by the labelers or developers, and some have been sampled from OpenAI's API requests (i.e. from their GPT-3 customers). As this whole process is slow and expensive, the result is a relatively small, high-quality curated dataset (of approximately 12-15k data points, presumably) that is to be used to fine-tune a pretrained language model.

- Choice of model: instead of fine-tuning the original GPT-3 model, the developers of ChatGPT opted for a pretrained model in the so-called GPT-3.5 series. Presumably the baseline model used is the latest one, text-davinci-003, a GPT-3 model which was fine-tuned mostly on programming code.

Quite interestingly, therefore, in order to create a general purpose chatbot like ChatGPT, the developers decided to fine-tune on top of a “code model” rather than a pure text model.

Due to the limited amount of data for this step, the SFT model obtained after this process is likely to output text which is still (probabilistically) not very user-attentive and generally suffers from misalignment, in the sense explained in the above sections. The problem here is that the supervised learning step suffers from high scalability costs.

To overcome this problem, instead of asking human labelers to create a much bigger curated dataset, a slow and costly process, the strategy is now to have the labelers rank different outputs of the SFT model to create a reward model. Let's explain this in more detail in the following section.

Step 2: The reward model (RM)

The goal is to learn an objective function (the reward model) directly from the data. The purpose of this function is to give a score to the SFT model outputs, proportional to how desirable these outputs are for humans. In practice, this will strongly reflect the specific preferences of the selected group of human labelers and the common guidelines which they agreed to follow. In the end, this process will extract from the data an automatic system that is supposed to mimic human preferences.

Here’s how it works:

- A list of prompts is selected and the SFT model generates multiple outputs (anywhere between 4 and 9) for each prompt.

- Labelers rank the outputs from best to worst. The result is a new labeled dataset, where the rankings are the labels. The size of this dataset is approximately 10 times bigger than the curated dataset used for the SFT model.

- This new data is used to train a reward model (RM). This model takes as input a few of the SFT model outputs and ranks them in order of preference.

Because it is much easier for labelers to rank outputs than to produce them from scratch, this process scales up much more efficiently. In practice, this dataset has been generated from a selection of 30-40k prompts, and a variable number of the generated outputs (for each prompt) is presented to each labeler during the ranking phase.
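The InstructGPT paper describes the reward model as assigning a scalar score to each response and being trained on pairwise comparisons extracted from each ranking. The following is a minimal sketch of that pairwise loss on plain numbers, not the authors' implementation; the function name and the example scores are made up for illustration.

```python
import math
from itertools import combinations

def pairwise_ranking_loss(scores_best_to_worst):
    """Average of -log(sigmoid(r_preferred - r_rejected)) over all pairs.

    `scores_best_to_worst` holds the reward model's scalar scores for K outputs,
    listed in the order the labeler ranked them (best first).
    """
    pairs = list(combinations(scores_best_to_worst, 2))  # K choose 2 comparisons
    total = 0.0
    for preferred, rejected in pairs:
        diff = preferred - rejected
        total += math.log1p(math.exp(-diff))  # equals -log(sigmoid(diff))
    return total / len(pairs)

# Hypothetical scores for K = 4 outputs ranked by a labeler.
agrees_with_labeler = [2.0, 1.0, 0.0, -1.0]
disagrees_with_labeler = [-1.0, 0.0, 1.0, 2.0]

print(pairwise_ranking_loss(agrees_with_labeler))
print(pairwise_ranking_loss(disagrees_with_labeler))
print(math.comb(4, 2), math.comb(9, 2))  # comparisons per prompt for K = 4 and K = 9
```

Scores that respect the labeler's ordering give a lower loss than scores that reverse it. The last line also shows why ranking is data-efficient: a single ranking of 4 to 9 outputs yields between 6 and 36 pairwise comparisons.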

Step 3: Fine-tuning the SFT model via Proximal Policy Optimization (PPO)

Reinforcement Learning is now applied to fine-tune the SFT policy by letting it optimize the reward model. The specific algorithm used is called Proximal Policy Optimization (PPO) and the fine-tuned model is referred to as the PPO model.

What is PPO? Here are the main takeaways of this method:

- PPO is an algorithm that is used to train agents in reinforcement learning. It is called an "on-policy" algorithm because it learns from and updates the current policy directly, rather than learning from past experiences as in "off-policy" algorithms like DQN (Deep Q-Network). This means that PPO is continuously adapting the current policy based on the actions that the agent is taking and the rewards it is receiving.

- PPO uses a trust region optimization method to train the policy, which means that it constrains the change in the policy to be within a certain distance of the previous policy in order to ensure stability. This is in contrast to other policy gradient methods which can sometimes make large updates to the policy that can destabilize learning.

- PPO uses a value function to estimate the expected return of a given state or action. The value function is used to compute the advantage function, which represents the difference between the expected return and the current return. The advantage function is then used to update the policy by comparing the action taken by the current policy to the action that would have been taken by the previous policy. This allows PPO to make more informed updates to the policy based on the estimated value of the actions being taken.
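In the PPO paper, the "stay close to the previous policy" idea is most commonly implemented with a clipped surrogate objective rather than an explicit distance constraint. Below is a minimal sketch for a single action; the value `epsilon=0.2` and the example numbers are illustrative assumptions.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """PPO-style clipped objective for a single action (to be maximized).

    ratio     = pi_new(action | state) / pi_old(action | state)
    advantage = estimated advantage of that action
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)

# With a positive advantage, pushing the ratio past 1 + epsilon earns nothing extra.
print(clipped_surrogate(1.1, advantage=2.0))
print(clipped_surrogate(1.5, advantage=2.0))
print(clipped_surrogate(3.0, advantage=2.0))

# With a negative advantage, shrinking the ratio below 1 - epsilon earns nothing extra.
print(clipped_surrogate(0.5, advantage=-2.0))
```

Because the objective stops improving once the ratio leaves the interval [1 - epsilon, 1 + epsilon], the optimizer has no incentive to move the new policy far from the old one in a single update.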

In this step, the PPO model is initialized from the SFT model, and the value function is initialized from the reward model. The environment is a bandit environment which presents a random prompt and expects a response to the prompt. Given the prompt and response, it produces a reward (determined by the reward model) and the episode ends. A per-token KL penalty from the SFT model is added at each token to mitigate over-optimization of the reward model.
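A minimal sketch of how such a KL-penalized reward could be assembled for one response is shown below. Placing the reward model score on the final token is an assumption made here for illustration, as are the function name, the log-probabilities, and the value of `beta`.

```python
def kl_penalized_rewards(rm_score, logp_policy, logp_sft, beta=0.02):
    """Per-token rewards for one response in the bandit setup described above.

    rm_score    : scalar score from the reward model for the full response
    logp_policy : log-probabilities the current policy gave each sampled token
    logp_sft    : log-probabilities the frozen SFT model gives the same tokens
    beta        : penalty strength (illustrative value)
    """
    rewards = [-beta * (lp - ls) for lp, ls in zip(logp_policy, logp_sft)]
    rewards[-1] += rm_score  # assumption: the score arrives when the episode ends
    return rewards

logp_sft = [-1.2, -0.8, -2.0]
same_as_sft = kl_penalized_rewards(1.5, logp_sft, logp_sft)
drifted = kl_penalized_rewards(1.5, [-0.2, -0.1, -0.3], logp_sft)
print(sum(same_as_sft), sum(drifted))
```

With the same reward model score, the response on which the policy has drifted away from the SFT model ends up with a lower total reward, which is the mechanism that discourages the policy from exploiting quirks of the reward model.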

Performance Evaluation

Because the model is trained on human labelers' input, the core part of the evaluation is also based on human input, i.e. it takes place by having labelers rate the quality of the model outputs. To avoid overfitting to the judgment of the labelers involved in the training phase, the test set uses prompts from held-out OpenAI customers which are not represented in the training data.

The model is evaluated on three high-level criteria:

- Helpfulness: judging the model’s ability to follow user instructions, as well as infer instructions.

- Truthfulness: judging the model’s tendency for hallucinations (making up facts) on closed-domain tasks. The model is evaluated on the TruthfulQA dataset.

- Harmlessness: the labelers evaluate whether the model’s output is appropriate, denigrates a protected class, or contains derogatory content. The model is also benchmarked on the RealToxicityPrompts and CrowS-Pairs datasets.

The model is also evaluated for zero-shot performance on traditional NLP tasks like question answering, reading comprehension, and summarization, on some of which the developers observed performance regressions compared to GPT-3. This is an example of an “alignment tax” where the RLHF-based alignment procedure comes at the cost of lower performance on certain tasks.

The performance regressions on these datasets can be greatly reduced with a trick called pre-train mix: during training of the PPO model via gradient descent, the gradient updates are computed by mixing the PPO gradients with gradients from the original pretraining objective.

Shortcomings of the methodology

A very clear limitation of the methodology, as discussed in the InstructGPT paper (on which ChatGPT is based, according to its creators) is the fact that, in the process of aligning language models with human intentions, the data for fine-tuning the models is influenced by an intricate variety of subjective factors, including:

- The preferences of the labelers who produce the demonstration data.

- The researchers who design the study and write the labeling instructions.

- The choice of prompts crafted by the developers or provided by the OpenAI customers.

- The labelers' bias, which enters both the reward model training (through the ranking of outputs) and the model evaluation.

In particular, the authors point out the obvious fact that the labelers and researchers taking part in the training process may not be representative of all potential end users of the language model.

Apart from this clear “intrinsic” limitation, we want to point out a few other possible shortcomings of the method, problems not explicitly addressed, as well as some open questions:

Lack of control study: The reported results measure the performance of the final PPO model, taking the SFT model as the baseline. This can be misleading: how can we know the improvements are actually due to the RLHF? A proper (yet expensive) control study would consist of investing the exact same number of labeler-hours as those used to train the reward model into creating a larger curated SFT dataset with high-quality demonstration data. One would then be in a position to objectively measure the performance improvements of the RLHF methodology versus the supervised approach. In very simple terms, the lack of such a control study leaves a fundamental question completely open: is RLHF actually doing a good job in aligning language models?

Lack of ground truth for the comparison data: labelers can often disagree on the ranking of the model’s outputs. Technically speaking, the risk is to add a high potential variance to the comparison data without any ground truth.
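One simple way to quantify this kind of disagreement is to count how often two labelers order a pair of outputs the same way. The sketch below uses made-up rankings; the function name is hypothetical and this is not a metric taken from the article.

```python
from itertools import combinations

def pairwise_agreement(ranking_a, ranking_b):
    """Fraction of output pairs that two labelers order the same way.

    Each ranking lists the same output ids from best to worst.
    """
    pos_a = {item: i for i, item in enumerate(ranking_a)}
    pos_b = {item: i for i, item in enumerate(ranking_b)}
    pairs = list(combinations(ranking_a, 2))
    same = sum(
        (pos_a[x] < pos_a[y]) == (pos_b[x] < pos_b[y]) for x, y in pairs
    )
    return same / len(pairs)

# Hypothetical rankings of four outputs by two labelers.
labeler_1 = ["A", "B", "C", "D"]
labeler_2 = ["B", "A", "C", "D"]
print(pairwise_agreement(labeler_1, labeler_2))
```

A value of 1 means the two labelers agree on every pair, and a value of 0 means one ranking is the exact reverse of the other. Anything in between becomes label noise for the reward model.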

Human preferences are just not homogeneous: The RLHF method treats human preferences as if they were homogeneous and static. Assuming that all people share the same values would be an obvious stretch, at least across a large number of topics of human knowledge. Some recent research is starting to tackle this open problem differently.

Prompt-stability testing for the reward model (RM): There seem to be no experiments investigating the sensitivity of the reward model to changes in the input prompt. If two prompts are syntactically different but semantically equivalent, can the RM show significant differences in the ranking of the model outputs? In simpler terms, how much does the quality of the prompt matter for the RM?

Wireheading-type issues: In RL approaches, the model can sometimes learn to manipulate its own reward system to achieve a desired outcome, leading to an "over-optimized policy". This can push the model to recreate patterns that, for some unknown reason, make the reward model score high (see Table 29 in this paper from OpenAI for an explicit example of this behavior in language modeling). ChatGPT puts a patch on this with the KL penalty term in the reward function. Note that one is trying to optimize the RM input (i.e. the PPO output) in order to improve its output (the reward score), all the while constraining the input itself to be not too far away from some reference input (the SFT output). More details on the limitations of this approach can be found in this recent preprint.

Selected references for further reading

- The most relevant paper about the RLHF methodology used for ChatGPT is Training language models to follow instructions with human feedback, which in fact details a model called InstructGPT, referred to by OpenAI as a "sibling model" to ChatGPT.

- Anthropic published a detailed study on the effectiveness of RLHF methods for finetuning language models to act as helpful and harmless assistants.

- The paper Learning to summarize from Human Feedback describes RLHF in the context of text summarization.

- Proximal Policy Optimization: the PPO algorithm paper.

- Deep reinforcement learning from human preferences was one of the earliest (Deep Learning) papers using human feedback in RL, in the context of Atari games.

- Alternatives to OpenAI's RLHF have been proposed by DeepMind in the Sparrow and GopherCite papers.

- A deep dive into the Alignment problem for language models is given in a (long) paper by Anthropic. Here's an excellent summary by Sam Ringer. Anthropic also has an open source repository (with accompanying paper) for RLHF.


FAQs

Does ChatGPT give correct answers? ›

No, ChatGPT does not give the exact same answer and wording to everyone who asks the same question. While it may generate similar responses for identical or similar queries, it can also produce different responses based on the specific context, phrasing, and quality of input provided by each user.

How does ChatGPT work so well? ›ChatGPT works by attempting to understand your prompt and then spitting out strings of words that it predicts will best answer your question, based on the data it was trained on.

Where does ChatGPT get its answers? ›ChatGPT is an AI language model that was trained on a large body of text from a variety of sources (e.g., Wikipedia, books, news articles, scientific journals).

How does ChatGPT work in simple terms? ›The model is updated based on how well its prediction matches the actual output. Through this process, the transformer learns to understand the context and relationships between words in a sequence, making it a powerful tool for natural language processing tasks such as language translation and text generation.

How often does ChatGPT give wrong answers? ›Very often. It cannot know the correct answer. It just generates text that conforms to what an answer might look like. There was one user who posted two answers contradicting each other.

How credible is ChatGPT? ›Is ChatGPT a credible source? No, ChatGPT is not a credible source of factual information and can't be cited for this purpose in academic writing. While it tries to provide accurate answers, it often gets things wrong because its responses are based on patterns, not facts and data.

Will ChatGPT replace programmers? ›In summary, while GPT has made significant progress in recent years, it is unlikely to replace human programmers entirely because it lacks the ability to execute code, think critically and solve complex problems, and generate new ideas.

How to make money with ChatGPT? ›- Find Unclaimed Money.

- Build an App, Website, or Service.

- Get Business Ideas From ChatGPT.

- Create an AI Chatbot.

- Email Affiliate Marketing.

- Create Videos with ChatGPT.

- Write e-Books and Self-Publish.

- Freelance and Create Content.

According to OpenAI, Chat GPT was trained using “Reinforcement Learning from Human Feedback” (RLHF).

What are the disadvantages of ChatGPT? ›The Disadvantages of ChatGPT. One of the biggest cons of ChatGPT or any other AI chatbot is that it cannot be used as an authoritative source of information. At the time of writing (April 2023), the technology still relies on content from the internet, as in 2021.

Why does ChatGPT give different answers to same question? ›

Yes, as an AI language model, I am capable of providing different answers to the same question. The reason for this is that my responses are generated based on patterns and relationships learned from analyzing vast amounts of human-written text.

Is ChatGPT owned by Google? ›Chat GPT is owned and developed by AI research and deployment company, OpenAI.

Does ChatGPT use the Internet? ›ChatGPT is not connected to the internet, and it can occasionally produce incorrect answers. It has limited knowledge of world and events after 2021 and may also occasionally produce harmful instructions or biased content. We'd recommend checking whether responses from the model are accurate or not.

Does ChatGPT use neural networks? ›At its core, ChatGPT technology is based on the principles of neural networks and machine learning. Neural networks are a type of artificial intelligence that are modeled after the structure and function of the human brain.

Does ChatGPT use machine learning? ›ChatGPT has been fine-tuned from a model in the GPT-3.5 series. It was trained on diverse data, including books, articles, and conversations, to understand various topics and contexts. It uses machine learning to generate human-like responses to text prompts.

Is Microsoft Copilot like ChatGPT? ›Copilot doesn't just connect ChatGPT with Microsoft 365; it combines the power of large language models (LLMs), including ChatGPT version 4, with your business data in the Microsoft Graph (including your calendar, emails, chats, documents, and meetings) and the Microsoft 365 apps.

What is the difference between OpenAI and ChatGPT? ›They both use similar generative AI models, but the main difference is that ChatGPT is designed for use by the general public while OpenAI Playground is more geared toward developers who want to experiment with OpenAI's various AI technologies.

Can chatbot give wrong answers? ›OpenAI, makers of chatbot rival ChatGPT, have been open about the limitations of their technology and have admitted it can sometimes write plausible-sounding but incorrect, or nonsensical, answers to humans' questions.

What is the success rate of ChatGPT? ›Chat GPT has an accuracy of 85+ %, which is a good percentage, and it can even write flawless code snippets, making it a valuable tool for software engineering.

Why does ChatGPT not give real sources? ›ChatGPT is based on a Large Language Model and does not have the ability to match relevant sources to any given topic.

Which jobs can ChatGPT replace? ›

- Customer service representatives.

- Technical writers.

- Translators and interpreters.

- Copywriters.

- Data entry clerks.

Conclusion. I come to the same conclusion as my colleague regarding Oracle DBA, ChatGPT will not replace a DevOps consultant yet as we can reach its limits when the topic becomes too complex or when we need a creative solution to an issue.

Is ChatGPT a threat to software engineers? ›ChatGPT is not a threat to software developers, but rather a tool that can help them produce more efficiently. ChatGPT is a language model that has been trained on a massive amount of data, allowing it to generate responses that are often indistinguishable from those of a human.

How to earn $1,000 dollars per day? ›- Sell off things you don't need.

- Get Paid to Do Market Research.

- Get Paid to Shop.

- Resell Sneakers.

- Sell an Online Course.

- Trade in Used Textbooks.

- Ask Your Boss for Overtime.

- Deliver Pizzas.

- Deliver groceries and goods. ...

- Walk dogs or pet-sit. ...

- Take online surveys. ...

- Become an Amazon reseller. ...

- Open your own Etsy shop. ...

- Rent a spare room in your home. ...

- Become a rideshare driver. ...

- Rent your car out.

According to the latest available data, ChatGPT currently has over 100 million users. And the website currently generates 1 billion visitors per month. This user and traffic growth was achieved in a record-breaking two-month period (from December 2022 to February 2023).

How much did ChatGPT cost to train? ›It took about 1 million GPU hours to train. With dedicated prices from AWS, that would cost over $2.4 million.

How much data is ChatGPT trained on? ›The model was trained using text databases from the internet. This included a whopping 570GB of data obtained from books, webtexts, Wikipedia, articles and other pieces of writing on the internet.

What is the pros and cons of ChatGPT? ›Pros of Chat GPT include improved natural language understanding, faster response times, and the ability to generate more natural-sounding conversations. Cons of Chat GPT include difficulty training models to respond appropriately to a wide range of topics and potential bias from the data used to train it.

Who benefits from ChatGPT? ›ChatGPT is a powerful tool for businesses looking to improve customer experiences, increase user satisfaction, and create content for search engine optimization. Additionally, this chatbot technology can be used to answer follow-up questions as well as respond to complex search query inputs.

How much text can ChatGPT handle? ›

ChatGPT has a hidden limit of about 500 words or 4,000 characters, but this depends on the request.

Does ChatGPT save your searches? ›ChatGPT does store your conversation history. This information is used to help provide better user experiences by having context for further conversations and allowing users to review previous discussion topics.

Is ChatGPT causing layoffs? ›U.S. companies expected layoffs caused by ChatGPT usage 2023

In a February 2023 survey, 33 percent of business leaders in the United States said that usage of ChatGPT will definitely cause more layoffs by the end of 2023.

New study finds that AI tools could more quickly handle at least half of the tasks that auditors, interpreters and writers do now. Accountants are among the professionals whose careers are most exposed to the capabilities of generative artificial intelligence, according to a new study.

Why is Google worried about ChatGPT? ›ChatGPT has sparked worry about the use and viability of conventional search engines, as the chatbot aims to provide answers to searches instead of just giving relevant links to users. ChatGPT is unlikely to replace Google search entirely in the near future.

Can I use ChatGPT without a phone number? ›Use Bing. Microsoft's search engine now has its own version of ChatGPT that you can use without verifying your phone number. You will need to have a Microsoft account, but unlike signing up through OpenAI, you can get a Microsoft account using a VoIP phone number (like the free ones available through Google Voice).

Can ChatGPT write code for a website? ›Yes, ChatGPT can write code for a website. It can generate code for HTML, CSS, and JavaScript. For back-end development, ChatGPT can also write code in Python, PHP and Ruby.

Does ChatGPT work offline? ›What is ChatGPT Offline: Works without Internet? Download ChatGPT on your device. You can use it without the internet connection.

What algorithm is ChatGPT based on? ›The original release of ChatGPT was based on GPT-3.5. A version based on GPT-4, the newest OpenAI model, was released on March 14, 2023, and is available for paid subscribers on a limited basis.

Is ChatGPT a deep learning AI? ›ChatGPT is an AI language model developed by OpenAI that uses deep learning to generate human-like text.

What is the difference between NLP and ChatGPT? ›

While NLP is a branch of artificial intelligence that focuses on making machines capable of understanding and processing human language, ChatGPT is a specific application of this technology, which uses NLP techniques to provide automated responses to questions and conversations with users.

Where does ChatGPT get its data? ›ChatGPT is an AI language model that was trained on a large body of text from a variety of sources (e.g., Wikipedia, books, news articles, scientific journals).

Does ChatGPT generate the same response? ›No, ChatGPT does not give the exact same answer and wording to everyone who asks the same question. While it may generate similar responses for identical or similar queries, it can also produce different responses based on the specific context, phrasing, and quality of input provided by each user.

How does ChatGPT tokenize? ›ChatGPT and other LLMs rely on input text being broken into pieces. Each piece is a sequence of characters roughly the size of a word or smaller. We call these pieces sub-word tokens.
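To make the idea of sub-word tokens concrete, here is a minimal sketch in Python. It is not ChatGPT's actual tokenizer: the vocabulary `TOY_VOCAB` and the function `tokenize` are hypothetical names invented for illustration, and the greedy longest-match rule is a simplification of the learned vocabularies that real models use.

```python
# Toy vocabulary of sub-word pieces (hypothetical, NOT ChatGPT's real vocabulary).
TOY_VOCAB = {"token", "ization", "un", "break", "able", "chat", "s"}


def tokenize(word, vocab=TOY_VOCAB):
    """Split a word into sub-word tokens by greedy longest-match."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining piece first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No known piece matches: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens


print(tokenize("tokenization"))  # ['token', 'ization']
print(tokenize("unbreakable"))   # ['un', 'break', 'able']
print(tokenize("chatbots"))      # ['chat', 'b', 'o', 't', 's']
```

Words that the vocabulary covers well become a few large pieces, while unfamiliar words fall apart into many small ones, which is why token counts and word counts differ.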

How accurate is ChatGPT zero? ›ZeroGPT, a third-party AI-text detector, states that its algorithm was developed after analyzing more than 10M articles and texts, some generated by AI and others written by humans, and claims a text-detection accuracy rate higher than 98%.

Why does ChatGPT give different answers to the same prompt? ›As an AI language model, ChatGPT is capable of providing different answers to the same question. The reason is that its responses are generated from patterns and relationships learned from analyzing vast amounts of human-written text, rather than looked up from a fixed set of answers.
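One common way for a language model to produce varying text is to sample each next word from a probability distribution instead of always picking the top candidate. The sketch below illustrates that idea only. The scores in `TOY_SCORES`, the function `sample_next_word`, and the `temperature` parameter are assumptions made for illustration; nothing here describes ChatGPT's actual decoding settings.

```python
import math
import random

# Hypothetical scores for three candidate next words (made up for illustration).
TOY_SCORES = {"helpful": 2.0, "useful": 1.5, "clever": 0.5}


def sample_next_word(scores, temperature=1.0, rng=random):
    """Sample one word with probability proportional to exp(score / temperature)."""
    words = list(scores)
    scaled = [scores[w] / temperature for w in words]
    # Subtract the maximum before exponentiating for numerical stability.
    max_scaled = max(scaled)
    weights = [math.exp(s - max_scaled) for s in scaled]
    return rng.choices(words, weights=weights, k=1)[0]


# Different random states can yield different words for the same "prompt".
varied = [sample_next_word(TOY_SCORES, rng=random.Random(seed)) for seed in range(20)]
print(varied)

# A very low temperature makes the top-scoring word win essentially every time.
sharp = [
    sample_next_word(TOY_SCORES, temperature=0.01, rng=random.Random(seed))
    for seed in range(20)
]
print(sharp)
```

In this toy setup, lowering the temperature concentrates the probability on the highest-scoring word, so repeated runs agree; raising it spreads the probability out, so repeated runs diverge.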

Is ChatGPT or Google better? ›While both ChatGPT and Google have their own unique capabilities, they are used for different purposes. ChatGPT is a sophisticated AI chatbot that is capable of understanding and responding to natural language, while Google is a powerful search engine that is used for finding specific information on the Internet.

Can teachers tell if you use ChatGPT? ›A teacher might be able to tell if you use ChatGPT. It depends on the quality and originality of the text, how much it has been modified, and how it is cited. It also depends on how familiar the teacher is with ChatGPT.

Can universities detect ChatGPT? ›It is now possible for universities to detect ChatGPT and many other AI content generators. If work is submitted through a university's learning management system or a tool such as Turnitin, AI and plagiarism detection should happen.

Can professors tell if you use ChatGPT? ›For code, this is tricky to answer. Technically, code will not be flagged by most plagiarism detectors, so your professors may have some difficulty identifying what is AI-generated and what is not.

How long does it take for ChatGPT to respond? ›When ChatGPT is at capacity, it can take as long as a few hours to wait out, but if you're patient, you'll get through eventually. Of all the problems facing ChatGPT right now, this has been the biggest hurdle keeping people from using it more.

What is the limit of ChatGPT? ›ChatGPT has a hidden limit of about 500 words or 4,000 characters per response, but this depends on the request. Users have reported that asking the AI for its official word or character limit results in a reply of "none," but that's not entirely correct.

What is the biggest problem with chatbots? ›
- Not identifying the customer's use case.
- Not understanding customer emotion and intent.
- The chatbot lacks transparency.
- When customers prefer human agents.
- Not able to address personalized customer issues.
- Lacking data collection and analysis functions.
- Not aligning with the brand.

Setting unrealistic expectations is often the reason why chatbots fail. Most chatbots are based on a set of rules that dictate the answer to give to a specific question by drawing the necessary resources from a database.

How does Morgan Stanley use ChatGPT? ›The solutions that Morgan Stanley Wealth Management is building do not use ChatGPT, which leverages GPT-3.5 and generates responses from the public internet. Morgan Stanley Wealth Management is using GPT-4 to generate responses exclusively from internal Morgan Stanley content, with appropriate controls.

According to McKinsey's The State of AI in 2022 report, the adoption of AI has more than doubled since 2017, with up to 60% of organisations using it in at least one business area, and IDC estimates that global spending on AI will reach US$154bn in 2023.

Is ChatGPT the best AI? ›The best overall AI chatbot is ChatGPT due to its exceptional performance, versatility, and free availability. It uses OpenAI's GPT-3.5 language model, making it highly proficient in various language tasks, including writing, summarization, translation, and conversation.

Is ChatGPT a threat to Google? ›ChatGPT, the high-profile AI chatbot from OpenAI, is such a serious threat to Google's core business that the company's co-founders are reengaged with the search giant, The New York Times reported Friday.